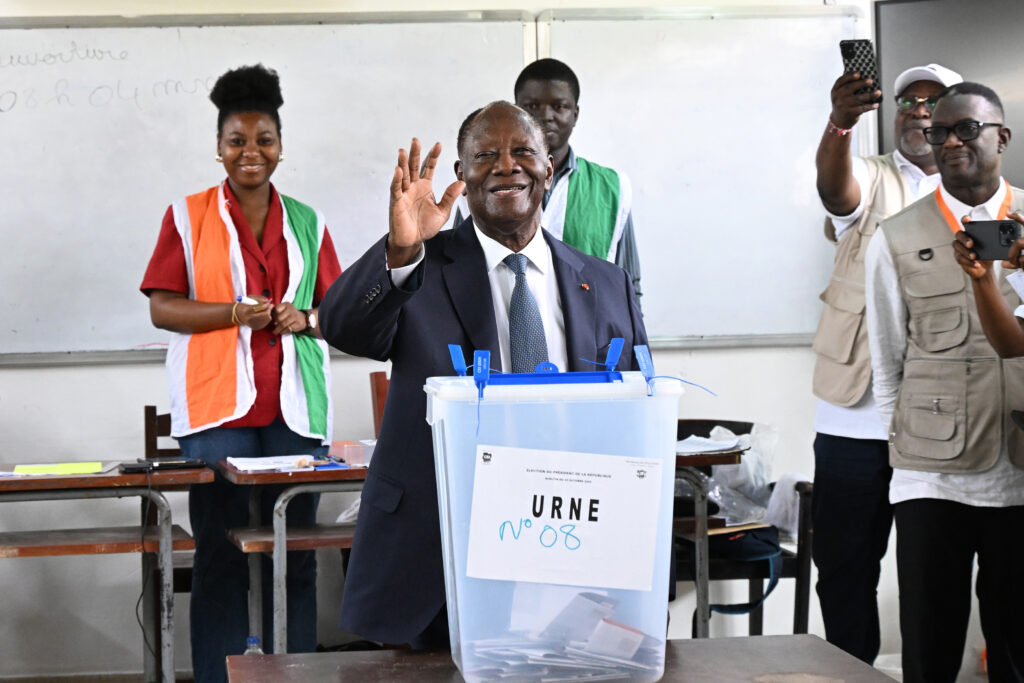

Alassane Ouattara is heading for a fourth term and a landslide victory in Côte d’Ivoire’s presidential election. Results are expected on Monday afternoon, after a calm vote from which his two main opponents were excluded.

On Saturday, nearly nine million voters were called to the polls in this West African country, the world’s leading cocoa producer, which has withstood the coups and jihadist attacks shaking the region. The final results, announced department by department by the Independent Electoral Commission (CEI), are expected around midday, before the winner is proclaimed in the afternoon.

There is no suspense: Alassane Ouattara, 83, is set to win by a huge margin, according to the results made public since Sunday. The head of state, in power since 2011, leads in every constituency.

In the north, a predominantly Malinké region and Mr. Ouattara’s ethnic group, he swept the board, as in every presidential election: 98.44% in Séguela, 99.7% in Kani and in his stronghold of Kong, 98.1% in Ferkessedougou and 97.8% in Sinématiali, each time with turnout approaching 100% in these rural areas of the country.

– “Legitimacy” –

In the south and west, where many polling stations were deserted on Saturday, turnout was much lower, but “ADO” also leads there. In Gagnoa, former stronghold of his longtime rival Laurent Gbagbo, he won 92% of the vote, but on a turnout of 20%.

According to observers, two factors explain the low turnout in these regions: the tense political climate that prevailed during the campaign, and the absence of the two main opposition leaders, former president Laurent Gbagbo and international banker Tidjane Thiam.
Both were barred from the election and struck off the electoral rolls, Gbagbo over a criminal conviction and Thiam over nationality issues. “Their absence, their calls to boycott the vote and the climate of tension that worsened over the last few days pointed to a significant demobilization of the electorate,” says William Assanvo, a researcher at the Institute for Security Studies (ISS).

On Sunday evening, their parties, united within the Front commun (Common Front), deplored an “international community that remained silent” and a regime “that has made repression and fear (…) into an electoral strategy”. They are already denying Alassane Ouattara “any legitimacy” and have called for new elections. Nearly 44,000 members of the security forces were deployed across the country, and the authorities had banned Front commun demonstrations in October, making hundreds of arrests, notably for disturbing public order.

– “Peaceful” or “divided” –

Four challengers were nonetheless in the race, including former first lady Simone Ehivet Gbagbo, but none stands a chance of reaching a runoff, as none has the backing of a major party or significant financial resources.

One of these candidates, businessman Jean-Louis Billon, has already congratulated Ouattara on his victory, while voicing alarm at the “very low turnout in some regions”.
Although the vote was largely calm, incidents were reported at 2% of polling sites, or about 200 locations, according to a security forces report seen by AFP. Clashes broke out in several localities in the south and west, but with no “major impact on the conduct of the vote”, according to Interior Minister Vagondo Diomandé.

For many Ivorians, presidential elections remain synonymous with political and intercommunal tension, after the 2010 (3,000 dead) and 2020 (85 dead) elections.

In two localities of the centre-west, Nahio and Nyamayo, in the Haut-Sassandra region, intercommunal clashes on election day left three people dead, according to security and government sources, who said calm had returned on Monday. A 13-year-old boy was also killed “by a shot” fired from a public transport vehicle in Gregbeu, another locality in the region, according to the security source.

In total, eight people have died in connection with the electoral process since mid-October, including four on election day (seven, according to the opposition).

On Monday, Abidjan returned to near-normal activity after an election weekend during which the economic capital was unusually deserted. “Ivorians said NO to the prophets of doom,” ran the front-page headline of Le Patriote, a pro-Ouattara newspaper, hailing “a Côte d’Ivoire standing tall for a peaceful vote”. An opposition daily, Notre Voie, instead described “a vote that mirrors a divided country”.